Understanding what determines the transition from memorization to generalization is now a central question in AI research worldwide.

In a recent study, Giulio Biroli, Tony Bonnaire and Raphaël Urfin of the LPENS laboratory, in collaboration with Marc Mézard (Bocconi University), uncovered the fundamental mechanism that enables generative models to escape memorization and acquire true creative capability.

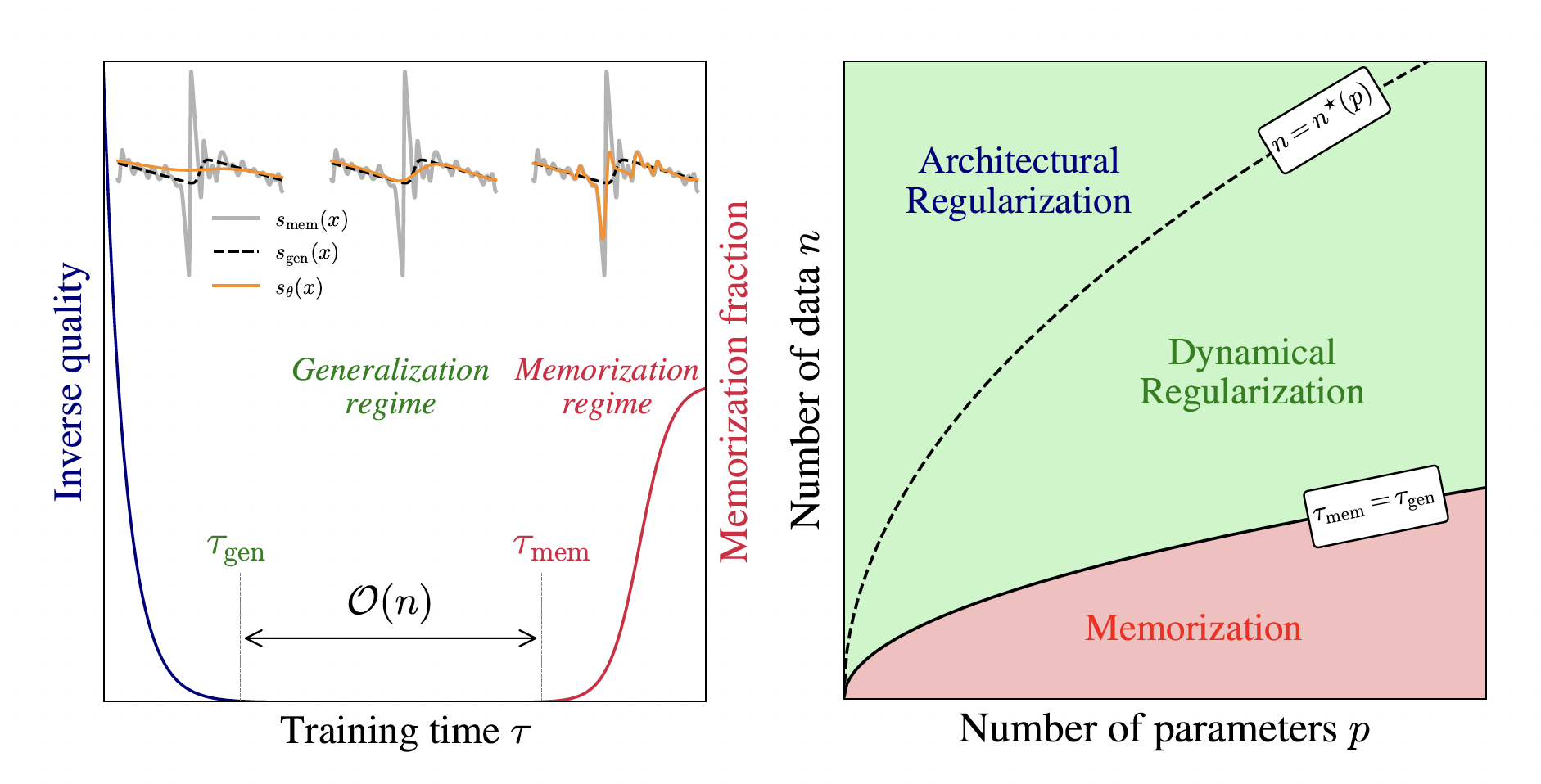

Using an approach that combines statistical physics, computer science, and numerical experiments, the team demonstrated that when the training dataset is very large, the learning process begins in a phase of generalization. Only after an extremely long training time does full memorization of the data become possible. In other words, the larger the dataset, the longer it takes to memorize.

These results show that a sufficiently powerful AI can, in principle, eventually memorize all the examples it is given. In most practical situations, however, the volume of training data makes the required time so large that the AI remains in a regime of generalization, and therefore of genuine creativity.

The work was accepted for an oral presentation at NeurIPS 2025, where it received the Best Paper Award.

This is a highly prestigious distinction: only 4 papers were selected from the 21,575 submitted (5,290 accepted).

Diffusion models first learn to generalize, and memorize only at large training times, proportional to the size of the dataset. This phenomenon is illustrated on the left of the figure, and the resulting phase diagram is shown on the right. The phase diagram shows that implicit dynamical regularization leads in practice to a large window of parameters in which diffusion models avoid memorization and are able to generalize.

More:

– Announcement on NeurIPS 2025 conference website

– The article

Author affiliation:

Laboratoire de physique de l’École normale supérieure (LPENS, ENS Paris/CNRS/Sorbonne Université/Université de Paris)

Corresponding author: Giulio Biroli

Communication contact: Communication team